CS-7650 Natural Language Processing

CS-7650 is the newest machine learning course in OMSCS. It covers Natural Language Processing from foundational concepts through contemporary techniques, along with a history of how the field arrived at Large Language Models.

I was lucky enough to enroll in its 2nd iteration for Fall 2023. At the time of writing, the course consists of 6 coding assignments, 6 quizzes, a final project and two exams. The assignments were pretty standard fare and should be pretty straightforward if you're well-versed in PyTorch. I particularly enjoyed the exam structure: the exams were open book, and we were given almost a week to complete our write-ups before submitting. I much prefer this structure, which tests your knowledge better than the more traditional closed-book, time-limited exam formats that value rote memorization, anxiety management and reading comprehension more than anything else.

Curriculum:

- Foundational Concepts: CS-7650 begins with a solid foundation in neural network basics, ensuring students are well-equipped with the fundamental knowledge required for more advanced topics. Concepts such as tokenization, part-of-speech tagging, and syntactic analysis are covered comprehensively.

- Text Classification: Moving into the realm of natural language processing, the course transitions to text classification, exploring techniques for classifying text using both traditional regression approaches and basic neural network models.

- Recurrent Neural Networks (RNNs): RNNs, a crucial component in NLP, are extensively covered. The course delves into the architectures of RNNs, LSTMs and Seq2Seq models, their ability to handle sequential data, and applications like language modeling.

- Distributional Semantics: The course explores distributional semantics, focusing on representing the meaning of words based on context. Topics include word embeddings, semantic similarity, and methods like Word2Vec and GloVe.

- Transformers: The revolutionary transformers take center stage in this section, with an in-depth exploration of attention mechanisms, the transformer architecture, and applications in tasks like sequence-to-sequence modeling and language understanding.

- Machine Translation: The classic problem of machine translation is addressed in the context of modern techniques and models. Approaches to machine translation, neural machine translation, and the application of attention mechanisms are covered.

- Current State-of-the-Art NLP Techniques (Meta AI): One of the highlights of CS-7650 is the expertise brought to the table by state-of-the-art researchers working in the field today. Several Facebook researchers at FAIR present their current research in Question Answering, Text Summarization, Privacy Preservation and Responsible AI.

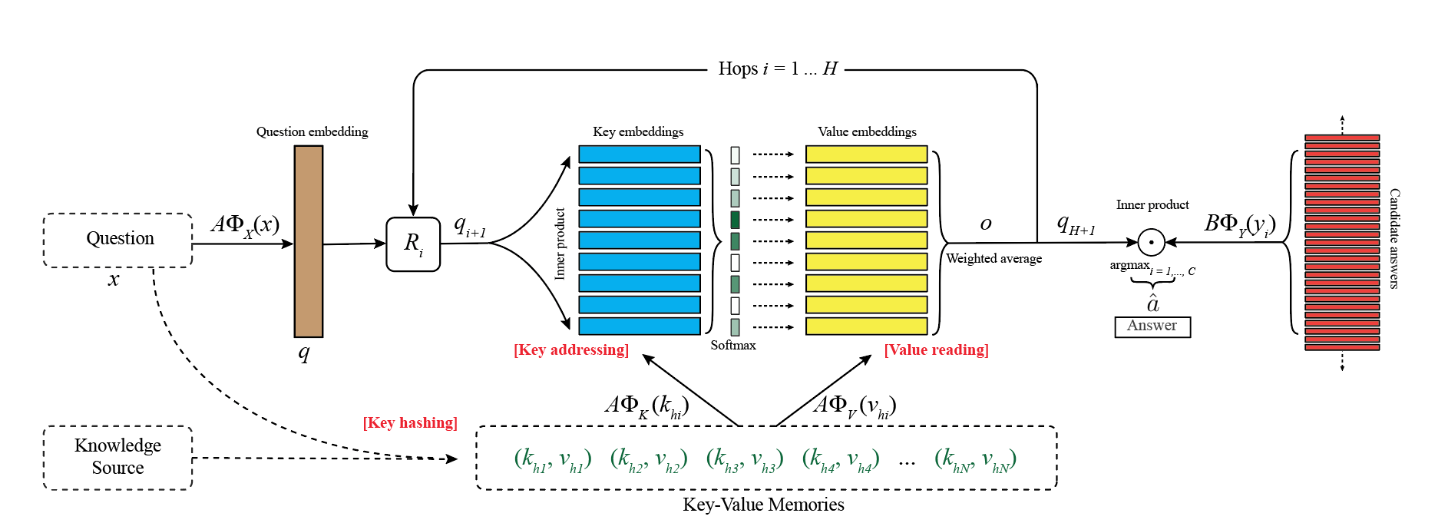

Key-Value Memory Networks

My biggest learning from this course was really appreciating how the current state of the art in LLMs with transformers came from an iterative evolution of neural architectures from the NLP research community. For the final project, we were challenged to design and optimize a key-value based memory network. It was both interesting to see how we could implement such a basic concept from computer science within a differentiable neural network, and insightful to see how this concept of memory would eventually evolve into attention mechanisms in later state-of-the-art architectures. This was one of the more challenging assignments I had to complete within OMSCS, ranking up there with training MARL agents in Reinforcement Learning.
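To make the link between memory lookup and attention a bit more concrete, here is a minimal sketch of a soft key-value read. It is not the project's code, and it uses plain Python rather than PyTorch so it runs anywhere. The function names `softmax` and `kv_memory_read`, along with the toy keys, values and query, are assumptions I made up for illustration. The idea is that instead of returning the value for one exact key, as a dictionary would, the read scores the query against every key and returns a weighted average of all values, which keeps the whole lookup differentiable.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1 (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def kv_memory_read(query, keys, values):
    """Soft lookup: weight every value by how well its key matches the query.

    query:  list of floats, length d
    keys:   list of n key vectors, each of length d
    values: list of n value vectors, each of length m
    Returns (output vector of length m, list of n addressing weights).
    """
    # Dot-product similarity between the query and each key.
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    # Addressing weights over the memory slots.
    weights = softmax(scores)
    # Weighted sum of the value vectors, one output dimension at a time.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


# Hypothetical toy memory with three slots.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]

output, weights = kv_memory_read(query, keys, values)
print("weights:", weights)
print("output:", output)
```

The first and third keys match the query equally well, so they receive equal weight, while the second key gets very little. The same score, softmax, weighted-sum pattern is what dot-product attention computes, with learned projections producing the queries, keys and values.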

Conclusion

CS-7650 has been one of the better courses I've taken in OMSCS. The lectures were done very well and the subject matter was very relevant given the recent surge in popularity around LLMs and NLP. I did kind of wish that the course covered more recent advances in the field like RLHF, but it was still a great foundational introduction to the field.