Redshift SQL Gotchas

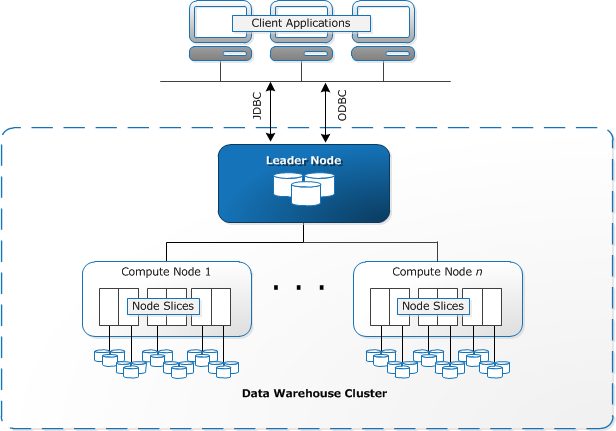

If you're working within the AWS Cloud ecosystem, you've probably had to work with AWS Redshift, which is the de-facto solution for data warehousing and supporting business intelligence, reporting, data, and analytics tools.

Here are 3 tips you should keep in mind when working with Redshift as your team's data-warehouse:

1) Primary key and Foreign Key Constraints are not enforced

Like other clustered database systems, AWS Redshift only uses primary and foreign keys as planning hints for certain statistical computations. Your ETL logic or source tables should be enforcing these constraints. Redshift is essentially a distributed column store and the main reason why it's able to batch writes and massive select and joins so quickly is because it doesn't need to handle uniqueness constraints from primary keys and indexes.

2) ISO-8601 and Timestamp Precision

An easy mistake to make with storing timestamps is to assume that storing timestamps as ISO-8601 strings, will automatically make it convertible between different databases, languages etc...

For example with the Go standard library supports nano-second precision:

package main

import (

"fmt"

"time"

)

func main() {

t := "2020-07-01T01:23:45.999999999Z"

res, err := time.Parse(time.RFC3339Nano, t)

if err == nil {

fmt.Println(res)

}

}2020-07-01 01:23:45.999999999 +0000 UTC

Program exited.If you stored this into Redshift and tried to cast it back into a DATE with something like created_at::date or TIMESTAMP you'd get the following error:

org.postgresql.util.PSQLException: ERROR: date/time field value out of rangeLooking at AWS's documentation we see that Redshift only supports 1 microsecond of precision!

To prevent issues like this, teams should ensure that their ETL code understands the source table schemas and do the appropriate transformations. Specifically for DynamoDB, even though DynamoDB itself only supports microsecond resolution, you should defensively check the string's precision with a rtrim or use to_date(created_at, 'YYYY-MM-DDTHH:MI:SS').

Teams should also access what type of precision they need with their data. In most cases, it's extremely rare that your system will actually need to be measuring time at a nanosecond precision. At those levels, you're at the mercy of system clock errors, clock drift, etc...

3) SQL Three-Valued Logic

This one isn't specific to Redshift, but is more of a tricky SQL gotcha. Lets say you have this sample query:

SELECT * from some_table WHERE name NOT IN ('a', 'b', 'c')

where name is nullable. Would you expect this to returns results where name is NULL? The query will not return any rows where name is NULL due three-valued logic. NULL is a special case where we designate data is absent and missing. From the context of a boolean expression, if we have the expression:

NULL != 'a'

Three-value logic defines the result of this as unknown since we don't actually know the value of the data. WHERE clauses must require conditions to evaluate to true.

The correct query would then be:

SELECT * from some_table WHERE name IS NULL OR name NOT IN ('a', 'b', 'c')